MPS announces operational use of live facial recognition

The Metropolitan Police Service has announced it will begin the operational use of Live Facial Recognition (LFR) technology for the first time in a bid to crack down on serious and violent crime.

The system is expected to become operational within four weeks. The force said its use of LFR would be intelligence-led, with cameras deployed at locations where serious offenders are most likely to be present.

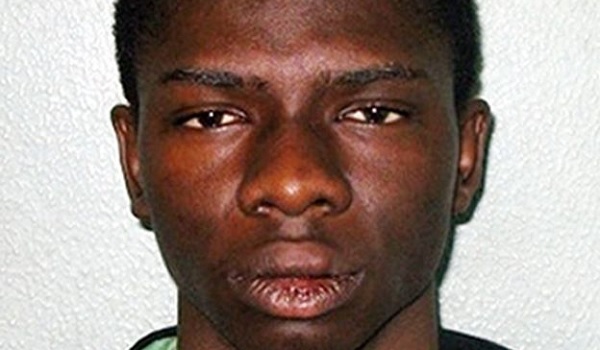

Each deployment will have a bespoke ‘watch list’, made up of images of wanted individuals, predominantly those suspected of serious violence, gun and knife crime and child sexual exploitation. Watch lists will contain up to 2,500 suspects and each deployment is expected to last between five and six hours.

Local communities and groups will be consulted and warned before the cameras are used. Deployments will be overt, with signs put up to alert members of the public and leaflets handed out to passers-by to inform them that an LFR system is in operation. The watch lists will be destroyed after each deployment.

Assistant Commissioner Nick Ephgrave said: “This is an important development for the Met and one which is vital in assisting us in bearing down on violence. As a modern police force, I believe that we have a duty to use new technologies to keep people safe in London. Independent research has shown that the public support us in this regard. Prior to deployment we will be engaging with our partners and communities at a local level.

“We are using a tried-and-tested technology, and have taken a considered and transparent approach in order to arrive at this point. Similar technology is already widely used across the UK, in the private sector. Ours has been trialled by our technology teams for use in an operational policing environment.

“Every day, our police officers are briefed about suspects they should look out for; LFR improves the effectiveness of this tactic. Similarly, if it can help locate missing children or vulnerable adults swiftly, and keep them from harm and exploitation, then we have a duty to deploy the technology to do this.”

The announcement, which comes amid ongoing legal challenges by civil rights organisations, is likely to be highly controversial. Opponents of the technology have previously complained about low levels of accuracy, the risk of unfair profiling, racial bias and related privacy concerns.

In a landmark judgment last year on a trial of facial recognition by South Wales Police, the High Court ruled that the technology was lawful. The civil rights group Liberty had argued that the use of facial scans was equivalent to the unregulated taking of DNA or fingerprints without consent and called for a total ban.

However, the High Court found that police had applied adequate safeguards and complied with both equality and data protection laws.

In a subsequent ruling, which also covered MPS trials of LFR, the Information Commissioner’s Office (ICO) backed the use of the technology but criticised the lack of a nationwide code of practice or guidelines for its deployment.

The ICO said that while there had been a “reduction in the number of false matches since trials first began in 2017, more needed to be done to reduce technology bias”.

The MPS says improvements in the technology mean that 70 per cent of those on a watch list whose images are captured by LFR cameras are identified, while only one person in every 1,000 generates a false alert.

Mr Ephgrave added: “We all want to live and work in a city which is safe: the public rightly expect us to use widely available technology to stop criminals.

“Equally I have to be sure that we have the right safeguards and transparency in place to ensure that we protect people’s privacy and human rights. I believe our careful and considered deployment of live facial recognition strikes that balance.”